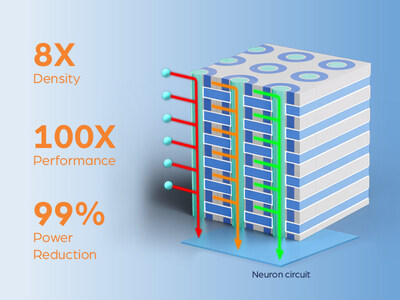

3D X-AI Chip Accelerates AI Performance by 100X

I think we’ll see a lot more of these types of AI-driven announcements in the near term.

NEO Semiconductor unveiled 3D X-AI chip technology designed to replace the existing DRAM chips inside high bandwidth memory (HBM). The new chips address data bus bottlenecks by enabling AI processing in 3D DRAM. NEO claims that 3D X-AI can not only reduce the staggering amount of data transferred between HBM and GPUs during AI workloads, but also revolutionize the performance, power consumption, and cost of AI chips for AI applications, including generative AI.

According to NEO, the 3D X-AI chip performs AI operations inside 3D memory, using 8,000 neuron circuits to deliver 100X performance acceleration. The company also claims a 99% power reduction, achieved by minimizing the need to transfer data to the GPU for calculation, and 8X memory density from the chip's 300 memory layers, which allows HBM to store larger AI models.

A single 3D X-AI die includes 300 layers of 3D DRAM cells with 128 Gb capacity and one layer of neural circuits with 8,000 neurons. This supports up to 10 TB/s of AI processing throughput per die. Twelve 3D X-AI dies stacked with HBM packaging achieve 120 TB/s of processing throughput, which NEO says results in a 100X performance increase.
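The stack figures follow from simple multiplication of the per-die numbers, which a short sketch makes explicit. The per-die values below come from the announcement; the function name `stack_totals`, the assumption that throughput and capacity scale linearly with die count, and the reading of "100X" as relative to an unstated baseline are illustrative assumptions, not NEO's published method.

```python
# Per-die figures quoted in the announcement.
DIE_THROUGHPUT_TBPS = 10   # TB/s of AI processing throughput per die
DIE_CAPACITY_GBIT = 128    # Gb of 3D DRAM per die
DIE_NEURONS = 8_000        # neurons in the single neural-circuit layer
DIES_PER_STACK = 12        # dies stacked with HBM packaging


def stack_totals(dies=DIES_PER_STACK):
    """Hypothetical helper: totals for a stack, assuming linear scaling per die."""
    return {
        "throughput_tbps": dies * DIE_THROUGHPUT_TBPS,
        "capacity_gbyte": dies * DIE_CAPACITY_GBIT / 8,  # 8 bits per byte
        "neurons": dies * DIE_NEURONS,
    }


totals = stack_totals()

# Assumption: if "100X" is measured against the 120 TB/s stack figure,
# the implied baseline is the stack throughput divided by 100.
implied_baseline_tbps = totals["throughput_tbps"] / 100

print(totals)
print(f"Implied baseline: {implied_baseline_tbps} TB/s")
```

Under these assumptions a 12-die stack works out to 120 TB/s, matching the quoted figure. The derived capacity and neuron totals are not stated in the announcement and should be read as back-of-the-envelope estimates.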

For designers today, this means faster execution of emerging AI use cases and room to create new ones.

NEO Semiconductor is showcasing its technologies at FMS: the Future of Memory and Storage, booth #507.