How to use Raspberry Pi to make a wheelchair controlled by eye movements

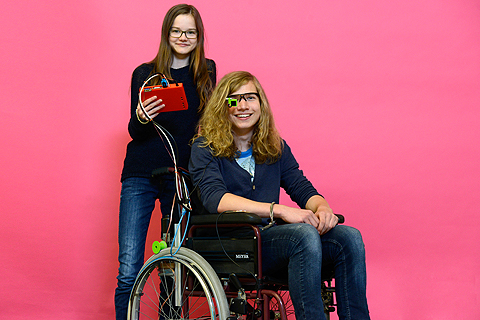

A team of teens from Germany has built a system for controlling a wheelchair with eye movements alone.

Myrijam Stoetzer and Paul Foltin track eye movements with a standard webcam whose infrared filter they removed. Infrared LEDs illuminate the user's eye, which lets the system work in low light.

The teens used a Raspberry Pi to process the video stream and locate the pupil, then compared its position with adjustable preset values representing forward, reverse, left and right eye movements.
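The article doesn't describe how the pupil is found or matched, so here is a minimal sketch under stated assumptions: because the pupil tends to be the darkest region of an infrared-lit eye image, it thresholds a grayscale frame and takes the centroid of the dark pixels, then picks the nearest calibrated preset. The threshold, the distance tolerance and the `find_pupil`/`classify` names are hypothetical; on real frames, OpenCV's `cv2.threshold` and `cv2.moments` would do the same job far faster.

```python
import math
from typing import Dict, List, Optional, Tuple

Frame = List[List[int]]  # grayscale eye image: rows of 0-255 pixel values
Point = Tuple[float, float]


def find_pupil(frame: Frame, threshold: int = 40) -> Optional[Point]:
    """Return the (x, y) centroid of all pixels darker than `threshold`.

    Assumes the pupil is the darkest region under IR light. Returns None
    when no pixel is dark enough (eye closed, pupil not found).
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value < threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def classify(pupil: Optional[Point], presets: Dict[str, Point],
             max_dist: float = 2.0) -> str:
    """Map a pupil position to the nearest adjustable preset (hypothetical scheme).

    If no pupil was found, or no preset lies within `max_dist` pixels,
    the result is "stop".
    """
    if pupil is None:
        return "stop"
    best, best_dist = "stop", max_dist
    for name, (px, py) in presets.items():
        dist = math.hypot(pupil[0] - px, pupil[1] - py)
        if dist <= best_dist:
            best, best_dist = name, dist
    return best


# Usage: a 9x9 bright frame with a dark 2x2 "pupil" near the top.
frame = [[200] * 9 for _ in range(9)]
for y in (1, 2):
    for x in (4, 5):
        frame[y][x] = 10
presets = {"forward": (4, 1), "reverse": (4, 7), "left": (1, 4), "right": (7, 4)}
pupil = find_pupil(frame)
print(pupil, classify(pupil, presets))  # (4.5, 1.5) forward
```

Keeping the presets as plain numbers means they can be re-recorded for each user, which fits the article's point that the values are adjustable; looking straight ahead (roughly the middle of the presets) falls outside every tolerance and yields "stop".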

For now, each eye command has to be confirmed with a switch, but eventually the confirmation step will be hooked up to a system that lets the user confirm just by moving his or her tongue or cheek.
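One way to picture the confirmation step, as a sketch only (the `ConfirmGate` class is hypothetical, not the teens' code): the current eye command is passed on only on the frame where the switch goes from released to pressed, so holding the switch down does not repeat the command. A later tongue or cheek sensor could supply the same boolean without changing the logic.

```python
from typing import Optional


class ConfirmGate:
    """Release an eye command only when the user presses the confirm input.

    Edge-triggered: the command is returned on the press itself, and
    nothing is returned while the input stays held or released.
    """

    def __init__(self) -> None:
        self._was_pressed = False

    def update(self, command: str, pressed: bool) -> Optional[str]:
        confirmed = command if (pressed and not self._was_pressed) else None
        self._was_pressed = pressed
        return confirmed


gate = ConfirmGate()
# One tuple per video frame: (command from the eye tracker, switch state).
frames = [("left", False), ("left", True), ("left", True), ("forward", False), ("forward", True)]
print([gate.update(cmd, pressed) for cmd, pressed in frames])
# [None, 'left', None, None, 'forward']
```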

To drive the 3D-printed wheelchair wheels, they used an Arduino that switches recycled windshield wiper motors through relays. They even 3D-printed the camera casing!
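The article doesn't say how the relays are wired or how the Pi talks to the Arduino, so the following is a sketch under loudly stated assumptions: each wiper motor has one relay that switches it on and one polarity-reversing relay, and the Pi sends the four relay states to the Arduino as a single byte. The bit layout, the `WHEELS` table and `relay_byte` are all hypothetical.

```python
# Hypothetical wheel directions per command: +1 forward, -1 reverse, 0 stopped.
WHEELS = {
    "forward": (1, 1),
    "reverse": (-1, -1),
    "left": (0, 1),   # only the right wheel pushes, so the chair pivots left
    "right": (1, 0),  # only the left wheel pushes, so the chair pivots right
    "stop": (0, 0),
}


def relay_byte(command: str) -> int:
    """Pack the relay states for one command into a single byte.

    Assumed layout: bit 0 = left motor on, bit 1 = left motor reversed,
    bit 2 = right motor on, bit 3 = right motor reversed.
    """
    left, right = WHEELS.get(command, (0, 0))  # unknown command -> stop
    byte = 0
    for shift, direction in ((0, left), (2, right)):
        if direction != 0:
            byte |= 1 << shift          # switch the motor on
        if direction < 0:
            byte |= 1 << (shift + 1)    # flip the polarity relay
    return byte


print({cmd: relay_byte(cmd) for cmd in WHEELS})
# {'forward': 5, 'reverse': 15, 'left': 4, 'right': 1, 'stop': 0}
packet = bytes([relay_byte("reverse")])  # b'\x0f'
```

On real hardware the `packet` could be written to the Arduino's serial port (for example with pySerial's `Serial.write`), with matching Arduino code setting each relay pin from its bit; that protocol is an assumption, not something the article describes. Mapping unknown commands to "stop" keeps the motors off if anything upstream goes wrong.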

According to the Raspberry Pi blog, the wheelchair is getting another high-tech upgrade: “The latest feature addition is a collision detection system using IR proximity sensors to detect obstacles.”
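How the collision detection hooks into the controls isn't described. A simple version might veto any motion toward a nearby obstacle, as in this sketch. Everything here is an assumption: the sensor names, readings normalised to 0-1 with larger meaning closer, the 0.6 limit, and letting turns pass unchecked.

```python
# Hypothetical placement: which IR proximity sensor guards which motion.
GUARDS = {"forward": "front", "reverse": "rear"}


def veto_on_obstacle(command: str, readings: dict, limit: float = 0.6) -> str:
    """Replace a motion command with "stop" if the sensor facing that way sees an obstacle.

    `readings` maps sensor names to normalised proximity values in [0, 1],
    where larger means closer (assumed scaling). A missing reading is
    treated as blocked so the chair fails safe. Commands without a
    guarding sensor (turns, in this sketch) pass through unchanged.
    """
    sensor = GUARDS.get(command)
    if sensor is not None and readings.get(sensor, 1.0) >= limit:
        return "stop"
    return command


print(veto_on_obstacle("forward", {"front": 0.8, "rear": 0.1}))  # stop
print(veto_on_obstacle("reverse", {"front": 0.8, "rear": 0.1}))  # reverse
```

Placing this check after the eye tracker and before the motor output means an obstacle can block a confirmed command without the user having to react.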

Stoetzer and Foltin used to work mainly with Lego Mindstorms, and the wheelchair is their first Raspberry Pi project. They’re writing it in Python with the OpenCV image processing library, teaching themselves as they go along.

The two have been competing in a German science competition for young people and are constantly refining and extending the system as they progress through the competition. They have moved on from a model robotic platform to a real second-hand wheelchair. They’re even using their prize money from earlier rounds to fund improvements for later stages.

Story via Raspberry Pi.