Researchers from the Georgia Institute of Technology have developed an ultra-low power hybrid chip inspired by the brain that could help give palm-sized robots the ability to collaborate and learn from their experiences.

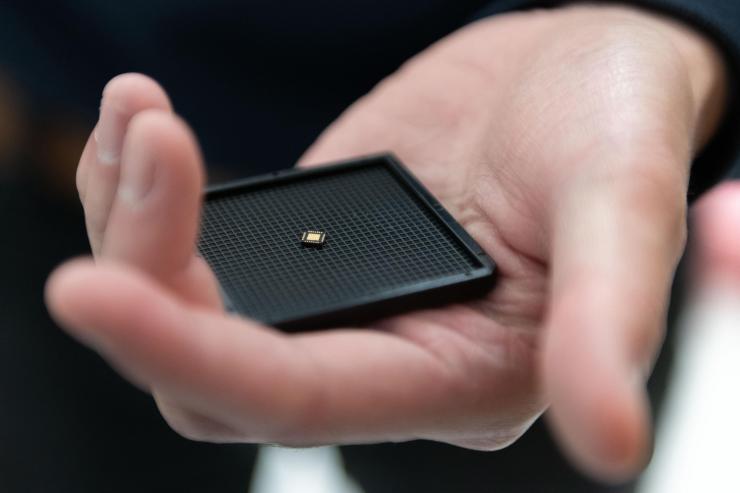

The new application-specific integrated circuit (ASIC) operates on milliwatts of power and, when combined with new generations of low-power motors and sensors, could help intelligent swarm robots operate for hours instead of minutes.

To conserve power, the chips use a hybrid digital-analog time-domain processor in which the pulse width of signals encodes information. The neural network IC accommodates both model-based programming and collaborative reinforcement learning, potentially giving the small robots greater capabilities for reconnaissance, search-and-rescue and other missions.

According to Arijit Raychowdhury, associate professor in Georgia Tech’s School of Electrical and Computer Engineering, the team’s goal is to make very small robots very intelligent so that they can learn about their environments and move around independently.

“To accomplish that, we want to bring low-power circuit concepts to these very small devices so they can make decisions on their own. There is a huge demand for very small, but capable robots that do not require infrastructure,” said Raychowdhury.

To demonstrate these ultra-low power chips, the team used robotic cars driven by the unique ASICs at the 2019 IEEE International Solid-State Circuits Conference (ISSCC). The cars navigated through an arena floored with rubber pads and surrounded by cardboard block walls. As they searched for a target, the robots were tasked with avoiding traffic cones and each other, learning from the environment and continuously communicating with each other.

How They Work

The cars use inertial and ultrasound sensors to determine their location and detect objects around them. Information from the sensors goes to the hybrid ASIC, which serves as the “brain” of the vehicles. Instructions from the ASIC then go to a Raspberry Pi controller, which sends commands to the electric motors.

In palm-sized robots, three major systems consume power: the motors and controllers used to drive and steer the wheels, the processor, and the sensing system. In the cars built by Raychowdhury’s team, the low-power ASIC means that the motors consume the bulk of the power. “We have been able to push the compute power down to a level where the budget is dominated by the needs of the motors,” he said.

The team is working with collaborators on motors that use micro-electromechanical (MEMS) technology able to operate with much less power than conventional motors.

“We would want to build a system in which sensing power, communications and computer power, and actuation are at about the same level, on the order of hundreds of milliwatts,” said Raychowdhury, who is the ON Semiconductor Associate Professor in the School of Electrical and Computer Engineering. “If we can build these palm-sized robots with efficient motors and controllers, we should be able to provide runtimes of several hours on a couple of AA batteries. We now have a good idea what kind of computing platforms we need to deliver this, but we still need the other components to catch up.”
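The runtime target can be sanity-checked with back-of-the-envelope arithmetic. The sketch below is only an estimate under stated assumptions: the cell voltage (1.5 V), cell capacity (2.0 Ah) and the 300 mW figure for each subsystem are illustrative values chosen here, not numbers from the Georgia Tech team, and the function name `runtime_hours` is hypothetical. It also treats the batteries as ideal.

```python
def runtime_hours(cell_voltage_v, cell_capacity_ah, cells, loads_mw):
    """Estimate runtime from ideal battery energy and a constant total load."""
    energy_wh = cell_voltage_v * cell_capacity_ah * cells  # stored energy in watt-hours
    total_load_w = sum(loads_mw.values()) / 1000.0          # milliwatts -> watts
    return energy_wh / total_load_w


# Assumed figures, not from the source: two AA cells at 1.5 V and 2.0 Ah each,
# and three subsystems drawing 300 mW apiece ("hundreds of milliwatts").
loads_mw = {
    "sensing": 300,
    "communications_and_compute": 300,
    "actuation": 300,
}
hours = runtime_hours(cell_voltage_v=1.5, cell_capacity_ah=2.0, cells=2, loads_mw=loads_mw)
print(f"Estimated runtime: {hours:.1f} hours")
```

Under these assumptions the estimate lands at several hours, in line with the goal described in the quote. A real system would likely see less, since voltage conversion losses and load-dependent battery capacity are ignored here.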

In time-domain computing, information is carried on signals that switch between two voltage levels and is encoded in the width of the pulses. That gives the circuits the energy-efficiency advantages of analog circuits along with the robustness of digital devices.
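As a rough illustration of pulse-width encoding, the sketch below represents a number as the length of time a two-level signal stays high within one period, then recovers it by counting the high ticks. The 16-tick period, the `HIGH`/`LOW` constants and the function names are assumptions made for this example; they do not describe the actual circuit.

```python
HIGH, LOW = 1, 0  # the two voltage levels, abstracted as 1 and 0


def encode_pulse(value, period=16):
    """Encode an integer as one period of a two-level signal.

    The signal is high for `value` ticks and low for the rest of the period.
    """
    if not 0 <= value <= period:
        raise ValueError("value must fit within one period")
    return [HIGH] * value + [LOW] * (period - value)


def decode_pulse(signal):
    """Recover the value by counting the ticks the signal spends high."""
    return sum(1 for level in signal if level == HIGH)


pulse = encode_pulse(5)
print(pulse)
print(decode_pulse(pulse))
```

In this toy model, representing a wider range of values requires a longer period, which gives some intuition for why a pulse-width scheme trades speed for efficiency.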

“The size of the chip is reduced by half, and the power consumption is one-third what a traditional digital chip would need,” said Raychowdhury. “We used several techniques in both logic and memory designs for reducing power consumption to the milliwatt range while meeting target performance.”

With each pulse width representing a different value, the system is slower than digital or analog devices, but Raychowdhury says the speed is sufficient for the small robots. (A milliwatt is a thousandth of a watt.)

“For these control systems, we don’t need circuits that operate at multiple gigahertz because the devices aren’t moving that quickly,” he said. “We are sacrificing a little performance to get extreme power efficiencies. Even if the compute operates at 10 or 100 megahertz, that will be enough for our target applications.”

The 65-nanometer CMOS chips accommodate both kinds of learning appropriate for a robot. The system can be programmed to follow model-based algorithms, and it can learn from its environment using a reinforcement system that encourages better and better performance over time – much like a child who learns to walk by bumping into things.

“You start the system out with a predetermined set of weights in the neural network so the robot can start from a good place and not crash immediately or give erroneous information,” Raychowdhury said. “When you deploy it in a new location, the environment will have some structures that it will recognize and some that the system will have to learn. The system will then make decisions on its own, and it will gauge the effectiveness of each decision to optimize its motion.”
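The idea of starting from predetermined values and then refining them through experience can be sketched with a much simpler stand-in than the chip's neural network. The example below uses tabular Q-learning, a standard reinforcement learning method, on a hypothetical five-cell corridor with the target in the last cell. The corridor, the reward values, the learning parameters and the preset table are all assumptions for illustration, not the team's setup.

```python
import random

N_CELLS, TARGET = 5, 4   # hypothetical corridor: cells 0..4, target in cell 4
ACTIONS = (-1, +1)       # move one cell left or one cell right


def step(state, action):
    """Move within the corridor; reaching the target pays 1.0, any other move costs 0.1."""
    next_state = min(max(state + action, 0), N_CELLS - 1)
    reward = 1.0 if next_state == TARGET else -0.1
    return next_state, reward


def train(q, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Refine a preset table of action values with epsilon-greedy Q-learning."""
    rng = random.Random(seed)
    for _ in range(episodes):
        state = 0
        while state != TARGET:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))   # occasionally explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward = step(state, ACTIONS[a])
            best_next = 0.0 if next_state == TARGET else max(q[next_state])
            q[state][a] += alpha * (reward + gamma * best_next - q[state][a])
            state = next_state
    return q


# Preset values that already lean toward the target, so the first run is not blind.
q_table = [[0.0, 0.1] for _ in range(N_CELLS)]
train(q_table)
policy = [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q_table[:TARGET]]
print(policy)
```

The preset table plays the role of the "good place" to start from, and the update inside `train` is where each decision's outcome is gauged and fed back into later choices.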

Communication between the robots allows them to collaborate to seek a target.

“In a collaborative environment, the robot not only needs to understand what it is doing but also what others in the same group are doing,” he said. “They will be working to maximize the total reward of the group as opposed to the reward of the individual.”
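The difference between group and individual reward can be shown with a small, made-up example. In the sketch below, two robots each choose between a shortcut and a detour; the reward table is entirely hypothetical and serves only to contrast the two objectives.

```python
# Hypothetical rewards, keyed by (robot 0 choice, robot 1 choice)
# and giving (robot 0 reward, robot 1 reward).
JOINT_REWARDS = {
    ("shortcut", "shortcut"): (2, 2),   # both crowd the same gap and slow each other
    ("shortcut", "detour"): (10, 7),
    ("detour", "shortcut"): (6, 10),
    ("detour", "detour"): (6, 6),
}


def best_for_group(joint_rewards):
    """Pick the joint choice with the highest total reward across all robots."""
    return max(joint_rewards, key=lambda joint: sum(joint_rewards[joint]))


def best_for_robot(joint_rewards, robot):
    """Pick the joint choice that is best for one robot, ignoring the others."""
    return max(joint_rewards, key=lambda joint: joint_rewards[joint][robot])


print(best_for_group(JOINT_REWARDS))
print(best_for_robot(JOINT_REWARDS, robot=1))
```

With this table, the group objective selects the outcome in which robot 1 takes the detour and accepts a lower reward than it could get on its own, because the total across both robots is higher.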

With their ISSCC demonstration providing a proof-of-concept, the team is continuing to optimize designs and is working on a system-on-chip to integrate the computation and control circuitry.

Story via Georgia Institute of Technology.