Game studios and enthusiasts may soon have a new tool to speed up game development and experiment with different styles of play.

Georgia Institute of Technology researchers have developed a new approach that uses artificial intelligence to learn a complete game engine, the basic software of a game that governs everything from character movement to rendering graphics.

Their AI system watches less than two minutes of gameplay video and then builds its own model of how the game operates by studying the frames and making predictions of future events, such as what path a character will choose or how enemies might react.

To get their AI agent to create an accurate predictive model that could account for all the physics of a 2D platform-style game, the team trained the AI on a single ‘speedrunner’ video, where a player heads straight for the goal.

This made “the training problem for the AI as difficult as possible.”

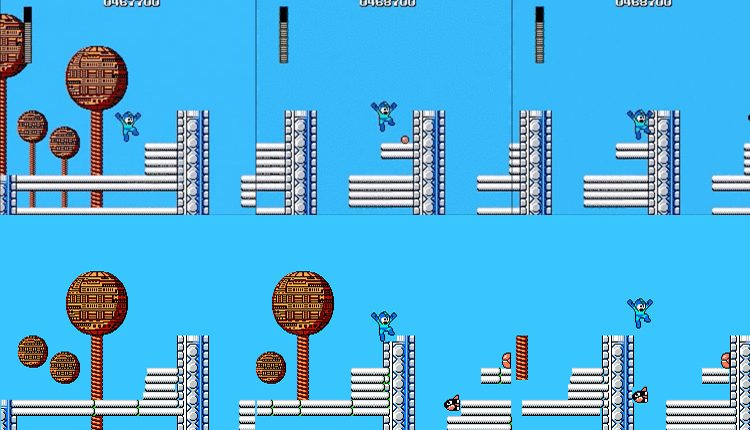

Their current work uses Super Mario Bros. and they’ve started replicating the experiments with Mega Man and Sonic the Hedgehog as well.

The same team had earlier used AI and Mario Bros. gameplay video to create unique game level designs. The researchers found that the frames predicted by their learned game engine were significantly more similar to those of the original game than the frames predicted by a neural network in the same test.

This gave them an accurate, general model of a game using only the video footage.

“Our AI creates the predictive model without ever accessing the game’s code, and makes significantly more accurate future event predictions than those of convolutional neural networks,” said Matthew Guzdial, lead researcher and Ph.D. student in computer science.

“A single video won’t produce a perfect clone of the game engine, but by training the AI on just a few additional videos you get something that’s pretty close.”

They next tested how well the cloned engine would perform in actual gameplay. They employed a second AI to play the game level and ensure the game’s protagonist wouldn’t fall through solid floors or go undamaged if hit by an enemy.

The results: the AI playing with the cloned engine proved indistinguishable from an AI playing with the original game engine.

“The technique relies on a relatively simple search algorithm that searches through possible sets of rules that can best predict a set of frame transitions,” said Mark Riedl, associate professor of Interactive Computing and co-investigator on the project. “To our knowledge this represents the first AI technique to learn a game engine and simulate a game world with gameplay footage.”
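The article does not spell out the algorithm, so the following is only a toy illustration of the general idea Riedl describes: searching over sets of rules for the set that best predicts observed frame transitions. Everything in it is an assumption made for illustration, including the frame representation (a single sprite's `(x, y)` position), the `CONDITIONS` table, the rule format, and the use of a greedy search. It is not the researchers' implementation.

```python
from itertools import product

# Hypothetical toy setup: a "frame" is just the (x, y) position of one sprite,
# with y == 0 meaning the sprite is standing on the ground.
CONDITIONS = {
    "always": lambda frame: True,
    "in_air": lambda frame: frame[1] > 0,
    "on_ground": lambda frame: frame[1] == 0,
}

def candidate_rules():
    """Enumerate hypothetical rules of the form: if condition holds, move by (dx, dy)."""
    deltas = [d for d in product((-1, 0, 1), repeat=2) if d != (0, 0)]
    return [(name, dx, dy) for name in CONDITIONS for dx, dy in deltas]

def predict(rules, frame):
    """Apply every rule whose condition holds in `frame` and sum their effects."""
    x, y = frame
    for name, dx, dy in rules:
        if CONDITIONS[name](frame):
            x, y = x + dx, y + dy
    return (x, y)

def error(rules, transitions):
    """Total Manhattan distance between predicted and observed next frames."""
    total = 0
    for frame, (next_x, next_y) in transitions:
        pred_x, pred_y = predict(rules, frame)
        total += abs(pred_x - next_x) + abs(pred_y - next_y)
    return total

def search_rules(transitions):
    """Greedy search: keep adding the rule that most reduces prediction error."""
    rules = []
    best = error(rules, transitions)
    while True:
        scored = [
            (error(rules + [rule], transitions), rule)
            for rule in candidate_rules()
            if rule not in rules
        ]
        if not scored:
            return rules
        new_best, rule = min(scored)
        if new_best >= best:  # no remaining rule improves the predictions
            return rules
        rules.append(rule)
        best = new_best

# Made-up "footage": the sprite runs right and falls until it lands.
transitions = [
    ((0, 2), (1, 1)),
    ((1, 1), (2, 0)),
    ((2, 0), (3, 0)),
    ((3, 0), (4, 0)),
]

rules = search_rules(transitions)
print("learned rules:", rules)
print("remaining error:", error(rules, transitions))
```

A rule set found this way can explain the footage without matching how a human would write the rules, which is consistent with Guzdial's point that a single video will not produce a perfect clone of the engine.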

The current cloning technique works well with games where much of the action happens on-screen. Guzdial said Clash of Clans or other games with action taking place off-screen might prove difficult for their system.

“Intelligent agents need to be able to make predictions about their environment if they are to deliver on the promise of advancing different technology applications,” he said. “Our model can be used for a variety of tasks in training or education scenarios, and we think it will scale to many types of games as we move forward.”
