An AI Tool to Detect AI-generated Text

(Note from the editor: Think you can spot AI-written content? I had AI write one of the paragraphs below, and Carolyn wrote the rest. Guess which one you think it is, and I’ll let you know at the end of the story.)

Machine-generated text has been fooling people for four years. Since GPT-2 was introduced in 2019, large language model (LLM) tools have steadily improved at crafting stories, news articles, and student essays, to the point that humans often cannot recognize when they are reading text produced by an algorithm. These LLM “writers” can be misused in harmful ways, and the inability to detect AI-generated text increases the potential for harm.

AI tools designed to detect AI-written content are becoming increasingly sophisticated as AI-generated text proliferates across various platforms. These detection tools utilize advanced algorithms to analyze linguistic patterns, sentence structures, and word choices characteristic of machine-generated text. By comparing the content with known human and AI writing samples, these tools can identify subtle differences in style, coherence, and complexity that might not be easily noticeable to a human reader.

So, machine learning models can identify subtle patterns of word choice and grammatical constructions to recognize LLM-generated text in a way that human intuition does not. Today, many commercial detectors claim to be highly successful at detecting machine-generated text with up to 99% accuracy, but are these claims too good to be true?

Chris Callison-Burch, a Computer and Information Science Professor, and Liam Dugan, a doctoral student in Callison-Burch’s group, analyzed the accuracy of these detectors in a recent paper published at the 62nd Annual Meeting of the Association for Computational Linguistics.

Callison-Burch claims, “It’s an arms race, and while the goal to develop robust detectors is one we should strive to achieve, there are many limitations and vulnerabilities in detectors that are available now.”

The research team created Robust AI Detector (RAID), a data set of over 10 million documents spanning recipes, news articles, blog posts, and more, including both AI-generated and human-written text. RAID is the first standardized benchmark for testing the detection ability of current and future detectors. In addition to creating the data set, the team built a public leaderboard that ranks detector performance in an unbiased way. The benchmark and leaderboard generated interest almost immediately.

What was the result of the analysis? RAID shows that many detectors fall short. “Detectors trained on ChatGPT were mostly useless in detecting machine-generated text outputs from other LLMs such as Llama and vice versa,” says Callison-Burch. “Detectors trained on news stories don’t hold up when reviewing machine-generated recipes or creative writing. What we found is that there is a myriad of detectors that only work well when applied to very specific use cases and when reviewing text similar to the text they were trained on.”
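To make the idea of a breakdown by generator and by type of text concrete, here is a minimal sketch of how a single headline accuracy number can hide weak spots. The records below are hand-made toy values, not RAID data or results, and the helper `accuracy_by` is a hypothetical name used only for illustration; the actual RAID evaluation may be structured quite differently.

```python
from collections import defaultdict

# Toy, hand-made records (NOT RAID data).
# Fields: (generator, domain, is_ai_written, detector_said_ai)
records = [
    ("chatgpt", "news", True, True),
    ("chatgpt", "news", True, True),
    ("chatgpt", "recipes", True, False),
    ("llama", "news", True, False),
    ("llama", "recipes", True, False),
    ("human", "news", False, False),
    ("human", "recipes", False, True),
]


def accuracy_by(records, key_index):
    """Group records by one field and return the fraction of correct verdicts per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for rec in records:
        key = rec[key_index]
        total[key] += 1
        # A verdict is correct when the detector's label matches the true label.
        if rec[2] == rec[3]:
            correct[key] += 1
    return {key: correct[key] / total[key] for key in total}


# One overall number, then the same verdicts split by generator and by domain.
overall = sum(rec[2] == rec[3] for rec in records) / len(records)
by_generator = accuracy_by(records, 0)
by_domain = accuracy_by(records, 1)

print(f"overall: {overall:.2f}")
print("by generator:", by_generator)
print("by domain:", by_domain)
```

Splitting the same verdicts by generator and by domain is what lets a shared benchmark show where a detector works and where it does not, rather than reporting only one aggregate figure.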

Credit: Chris Callison-Burch and Liam Dugan

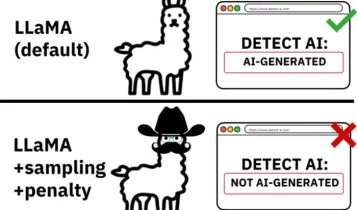

Detectors can identify AI-generated text when it has no edits or “disguises,” but current detectors cannot reliably identify AI-generated text once it has been manipulated.

“If universities or schools were relying on a narrowly trained detector to catch students’ use of ChatGPT to write assignments, they could be falsely accusing students of cheating when they are not,” says Callison-Burch. “They could also miss students who were cheating by using other LLMs to generate their homework.”

Openly evaluating detectors on large, diverse, shared resources is critical to accelerating progress and building trust in detection. That transparency will lead to the development of detectors that hold up across a variety of use cases.

The AI-written paragraph was #2, the one starting with “AI tools designed to detect AI-written content are becoming increasingly sophisticated…”

Did you guess correctly?