In his later years, Dr. Stephen Hawking’s electronically generated voice was enhanced by artificial intelligence (AI) that predicted his next words, much as predictive text does on our smartphones.

But what if augmented reality technology could have helped Hawking physically interact with his environment and complete countless tasks that many of us take for granted?

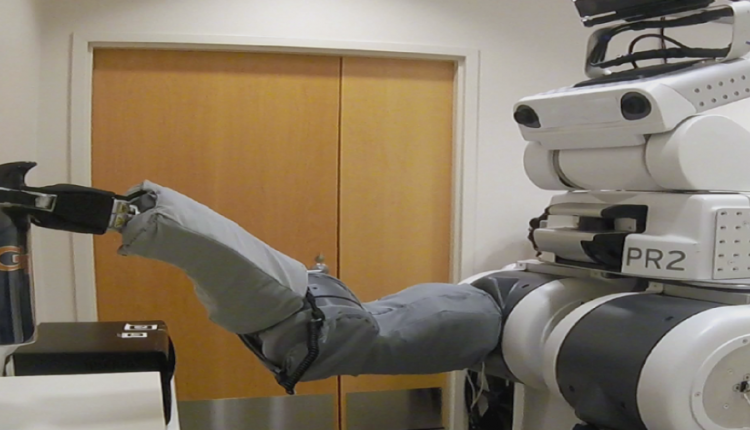

Thanks to new developments at the Georgia Institute of Technology, people with motor neuron diseases like Hawking’s, or with limited mobility from other causes, could one day interact with the world using a robot called PR2 together with augmented reality (AR) software. The technology could allow them to feed themselves and perform routine personal care tasks.

The web-based interface displays a “robot’s eye view” of the surroundings, with clickable controls overlaid on the view that let users move the robot and control its hands and arms. For instance, when users move the robot’s head, the mouse cursor appears as a pair of eyeballs to show where the robot will look when the user clicks. Clicking on a disc surrounding each robotic hand lets users select a motion. When users drive the robot around a room, lines that follow the cursor on the interface indicate the direction it will travel.

In one study of 15 people, 80% of participants (12 of the 15) were able to perform simple tasks with the robot using standard assistive computer access technologies, such as eye trackers and head trackers, that they already used to control their personal computers.

“Our results suggest that people with profound motor deficits can improve their quality of life using robotic body surrogates,” said Phillip Grice, a recent Georgia Institute of Technology Ph.D. graduate and first author of the paper describing the study. “We have taken the first step toward making it possible for someone to purchase an appropriate type of robot, have it in their home and derive real benefit from it.”

Story via Georgia Institute of Technology