Can Memristors Save AI from Forgetting Everything It Learned?

Imagine spending months designing a neural network that flawlessly recognizes faces—only to have it forget everything the moment you teach it to recognize traffic signs. It’s called catastrophic forgetting, and it’s one of the biggest thorns in AI’s side.

But what if we told you a new kind of memristor—an analog/digital hybrid component inspired by the human brain—could change all that?

What Exactly Is a Memristor, and Why Should You Care?

If you’re an electrical design engineer, you’ve likely been watching memristors with interest (and maybe a bit of skepticism) for the past decade. In theory, these two-terminal components promise to bring memory and computation closer together, something conventional architectures with separate memory and processor blocks struggle with. The big idea? A resistor that “remembers” its resistance state, even after power is removed. Unlike standard memory elements, memristors don’t just store 1s and 0s: they can hold intermediate resistance levels, capturing gradients and nuance.

That’s why memristors are often called brain-like: they behave more like synapses than switches.
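To make the "levels, not just 1s and 0s" idea concrete, here is a minimal sketch that stores a few synaptic weights on a two-state device versus a 16-state device. The function name `quantize_to_levels`, the evenly spaced levels, and the sample weights are illustrative assumptions, not figures from the paper.

```python
def quantize_to_levels(value, num_levels, lo=0.0, hi=1.0):
    """Snap value to the nearest of num_levels evenly spaced states in [lo, hi].

    Hypothetical model: a real device has unevenly spaced, noisy states.
    """
    if num_levels < 2:
        raise ValueError("need at least two levels")
    clamped = min(max(value, lo), hi)
    step = (hi - lo) / (num_levels - 1)
    index = round((clamped - lo) / step)
    return lo + index * step


def max_error(original, stored):
    """Largest absolute difference between intended and stored values."""
    return max(abs(a - b) for a, b in zip(original, stored))


# Assumed example weights, normalized to the range 0..1.
weights = [0.12, 0.37, 0.58, 0.91]

binary = [quantize_to_levels(w, 2) for w in weights]      # switch-like: 0 or 1
analog16 = [quantize_to_levels(w, 16) for w in weights]   # synapse-like: 16 states

print("binary   :", binary, "max error:", max_error(weights, binary))
print("16 levels:", analog16, "max error:", max_error(weights, analog16))
```

With more distinguishable states per device, each stored weight lands closer to its intended value, which is the property that makes analog memristors attractive as synapse stand-ins.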

But up to now, they’ve had some serious limitations: short lifespans, unpredictable performance, and sensitivity to heat and mechanical stress. Not ideal for high-reliability applications—or for scaling.

That’s what makes this new research from Germany’s Forschungszentrum Jülich, led by Prof. Dr. Ilia Valov, such a potential game-changer.

A New Memristor for a New Age of AI

In a recent Nature Communications paper, Valov’s team unveiled a novel memristive device with features that could make it far more suitable for neuromorphic and compute-in-memory systems.

Here’s what sets it apart:

- Robust electrochemical design

- Operates in both analog and digital modes

- Wider voltage window with lower switching thresholds

- Significantly lower failure rates during manufacturing

- More stable across thermal and electrical variations

The secret sauce? A new mechanism called Filament Conductivity Modification (FCM).

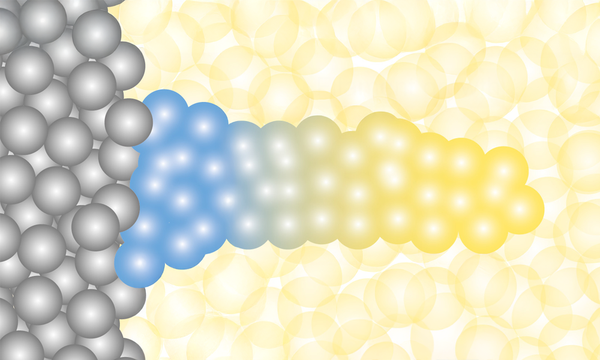

Traditional memristors rely on ECM (Electrochemical Metallization) or VCM (Valence Change Mechanism). ECM forms a metallic filament between the two electrodes, and the resistance changes as that filament forms and dissolves: fast, but unstable. VCM uses oxygen ion movement to modulate the Schottky barrier at the electrode interface: more stable, but at the cost of higher switching voltages.

Valov’s FCM approach, however, uses metal oxide filaments formed by the movement of oxygen and tantalum ions. These filaments don’t fully dissolve. Instead, they change chemically—allowing more stable, fine-tuned control over resistance states without full resets.

It’s like building a bridge that doesn’t collapse every time you need to reroute traffic. Instead, you adjust the traffic flow using signals—less stress on the structure, more predictable performance.

Why This Matters for You

If you’re designing AI hardware, edge inference systems, or even next-gen memory architectures, this is the kind of breakthrough that could reshape how you allocate memory, design for endurance, and handle data retention in mixed-signal environments.

These new memristors offer:

- Seamless integration into analog signal paths

- Retention of previous weights during re-training

- Potential solutions to the problem of catastrophic forgetting
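For readers who have not seen catastrophic forgetting in action, the toy below reproduces it with a two-weight linear model trained on one task and then another. It is a generic software illustration under assumed tasks and hyperparameters; it does not model the memristor or the networks used in the paper.

```python
def predict(w, x):
    """Output of a linear model: dot product of weights and inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train(w, x, target, lr=0.1, steps=200):
    """Plain gradient descent on squared error for a single example."""
    w = list(w)
    for _ in range(steps):
        error = predict(w, x) - target
        w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


# Two hypothetical tasks that share the first weight.
task_a = ([1.0, 1.0], 2.0)    # (inputs, target)
task_b = ([1.0, 0.0], -1.0)

# Learn task A first.
w = train([0.0, 0.0], *task_a)
error_a_before = abs(predict(w, task_a[0]) - task_a[1])

# Then learn task B with no protection for what was learned on A.
w = train(w, *task_b)
error_a_after = abs(predict(w, task_a[0]) - task_a[1])
error_b_after = abs(predict(w, task_b[0]) - task_b[1])

print("error on A after learning A:", error_a_before)
print("error on A after learning B:", error_a_after)
print("error on B after learning B:", error_b_after)
```

Training on the second task overwrites the shared weight, so the model fits task B while its error on task A jumps back up. Hardware that can adjust weights gently while preserving earlier states is aimed at this failure mode.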

They’re also a step toward a long-standing goal in embedded AI: computation-in-memory.

This approach sidesteps the von Neumann bottleneck by letting processing and storage happen in the same physical location, which cuts out most of the energy-intensive shuttling of data between memory and processor. That makes it faster, leaner, and better suited to power-constrained systems such as autonomous vehicles or edge AI modules.
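A common way to picture computation-in-memory is a memristor crossbar: each cell's conductance stores a weight, input voltages drive the rows, and the current collected on each column is a weighted sum. The sketch below simulates that idealized behavior in software. The function name `crossbar_mvm` and all numeric values are assumptions for illustration, and wire resistance, noise, and device nonlinearity are ignored.

```python
def crossbar_mvm(conductances, voltages):
    """Idealized crossbar read-out.

    conductances[i][j]: conductance in siemens between row i and column j.
    voltages[i]: voltage in volts applied to row i.
    Returns the current in amperes collected on each column.
    """
    if len(conductances) != len(voltages):
        raise ValueError("one voltage is required per crossbar row")
    num_cols = len(conductances[0])
    currents = [0.0] * num_cols
    for i, v in enumerate(voltages):
        for j in range(num_cols):
            # Ohm's law per cell (I = G * V); Kirchhoff's current law
            # sums the cell currents along each column wire.
            currents[j] += conductances[i][j] * v
    return currents


# Assumed 3x2 array of stored weights, in siemens.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]
V = [0.1, 0.2, 0.3]   # assumed input vector, in volts

I = crossbar_mvm(G, V)
print("column currents (A):", I)
```

In the physical array the nested loop does not exist: all multiplications and sums happen at once in the analog domain, right where the weights are stored, which is why no weight data has to travel to a separate processor.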

From Simulation to Application

Valov’s team has already run simulations using their new memristor model in artificial neural networks, showing strong accuracy across multiple image datasets.

But as with all promising research, there’s more work to do. The next step? Testing additional materials to push stability and efficiency even further.

Still, the implications are already intriguing. For engineers at the forefront of neuromorphic design, this work hints at a future where learning systems aren’t so easily wiped clean, one where machines learn a little more like we do.

And for those building tomorrow’s smarter devices, this might be the memristor worth remembering.

Image credit: Chen, S., Yang, Z., Hartmann, H. et al., Nat. Commun., https://doi.org/10.1038/s41467-025-57543-w