How to Train AI Efficiently Without Wasting 30% of Power

A Smarter Way to Save Energy While Training AI Models

AI training consumes substantial energy, and up to 30% of it is wasted. Much of this waste arises when a large training job is split across multiple GPUs (specialized processors). Today’s AI models are too large for a single processor, so training is spread across thousands of them, and it is nearly impossible to divide the work evenly. Processors with lighter loads finish early, yet they keep running at full speed while they wait for the others, burning energy without making training any faster.
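To make the imbalance concrete, here is a minimal Python sketch with made-up numbers. Four GPUs get unequal shares of work, all draw the same power whether they are computing or waiting, and each step ends only when the slowest GPU finishes. The work times and the 300-watt figure are illustrative assumptions, not measurements from the study.

```python
# Hypothetical per-step compute time (seconds) for four GPUs with uneven shares of work.
work_seconds = [1.0, 0.8, 0.7, 0.5]

# Assumed constant power draw (watts): the GPUs keep running at full speed while waiting.
POWER_WATTS = 300.0


def wasted_fraction(work, power):
    """Return the share of energy spent while GPUs wait for the slowest one."""
    step_time = max(work)                         # the step ends when the slowest GPU finishes
    total_energy = power * step_time * len(work)  # every GPU is powered for the whole step
    useful_energy = power * sum(work)             # energy spent actually computing
    return (total_energy - useful_energy) / total_energy


waste = wasted_fraction(work_seconds, POWER_WATTS)
print(f"Energy spent waiting: {waste:.0%}")
```

In this toy setup a quarter of the energy goes to waiting. The real figure depends on the model, the hardware, and how the work is divided.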

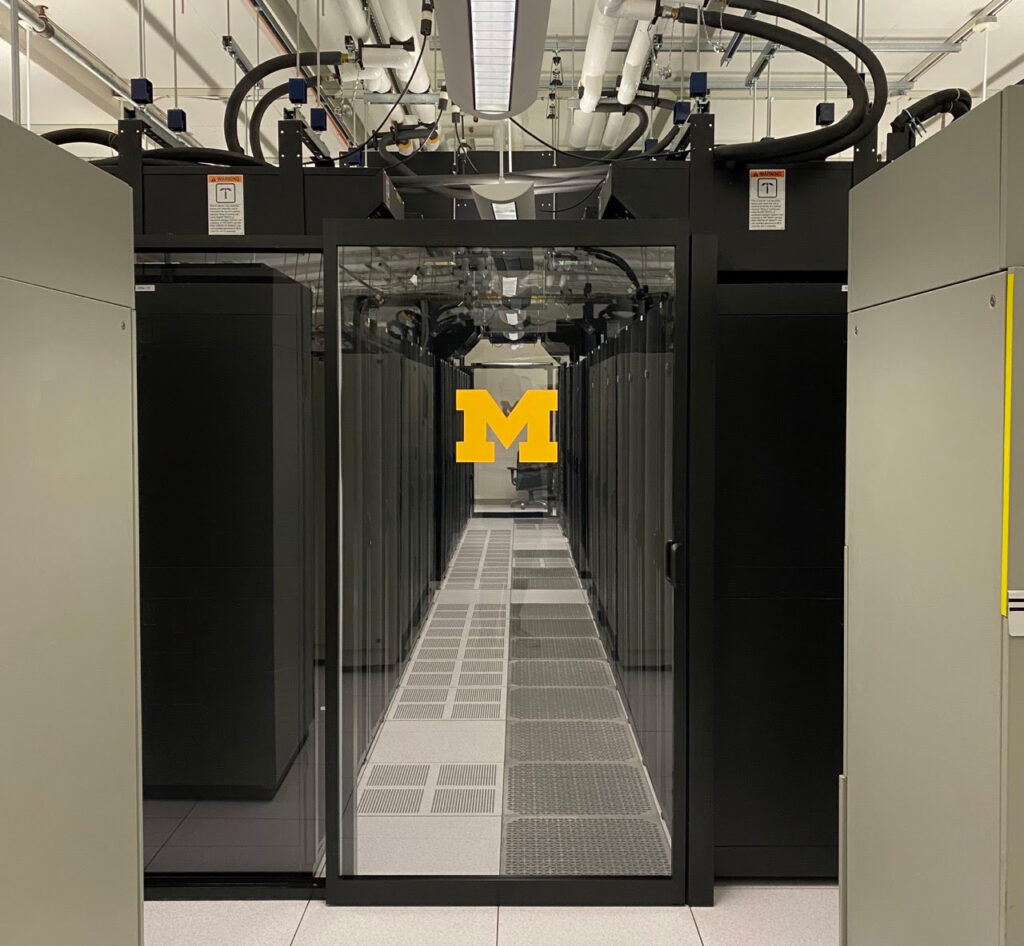

A recent University of Michigan study describes a method for training large AI models such as GPT-3 that cuts energy use by up to 30% without lengthening training time. The approach could save enough power to meet the energy needs of over a million U.S. homes by 2026, and it could significantly reduce AI’s carbon footprint, which is projected to grow with increasing demand.

The Solution: Smarter Workload Management

To address the uneven division of work, the Michigan team developed a software tool called Perseus. It identifies the “critical path,” the longest chain of tasks that must finish before training is complete, and then slows down the processors that are not on this path. Because every processor now finishes at about the same time, Perseus removes unnecessary power use without lengthening training.
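The idea can be sketched in a few lines of Python. This is a toy illustration, not Perseus’s actual algorithm or API: the task graph, the durations, and the helper names are all hypothetical. The sketch finds the longest chain through a small dependency graph and reports how much longer every other task could take without delaying the finish.

```python
from functools import lru_cache

# Hypothetical task graph: task -> (duration in seconds, tasks it depends on).
TASKS = {
    "A": (4.0, []),
    "B": (2.0, []),
    "C": (3.0, ["A"]),
    "D": (1.0, ["B"]),
    "E": (2.0, ["C", "D"]),
}

# Reverse edges: which tasks wait on each task.
SUCCESSORS = {name: [] for name in TASKS}
for name, (_, deps) in TASKS.items():
    for dep in deps:
        SUCCESSORS[dep].append(name)


@lru_cache(maxsize=None)
def earliest_finish(name):
    """Earliest time a task can finish, given its dependencies."""
    duration, deps = TASKS[name]
    return duration + max((earliest_finish(d) for d in deps), default=0.0)


# The length of the critical path is the earliest time the whole job can finish.
TOTAL_TIME = max(earliest_finish(n) for n in TASKS)


@lru_cache(maxsize=None)
def latest_finish(name):
    """Latest time a task may finish without delaying the whole job."""
    succ = SUCCESSORS[name]
    return min((latest_finish(s) - TASKS[s][0] for s in succ), default=TOTAL_TIME)


# Slack: how much longer a task could take without changing the finish time.
slack = {n: latest_finish(n) - earliest_finish(n) for n in TASKS}
critical_path = [n for n in TASKS if slack[n] == 0.0]

for n in TASKS:
    if slack[n] == 0.0:
        print(f"{n}: on the critical path, keep at full speed")
    else:
        print(f"{n}: can take up to {slack[n]:.1f}s longer")
```

Here A, C, and E form the critical path. B and D sit on a shorter chain and share the same 4 seconds of slack, so the two together can be stretched by 4 seconds in total, not 4 seconds each. A real system also has to decide how much energy each slowdown saves, which this sketch leaves out.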

“Why waste energy on something that doesn’t improve results?” said Mosharaf Chowdhury, U-M associate professor of computer science and engineering and corresponding author of the study. “If we can cut down on AI’s energy consumption, we can reduce its carbon footprint, cooling requirements, and fit more computing power within our existing energy resources.”

Testing Perseus with Large AI Models

The researchers tested Perseus on several models, including GPT-3 and other large language models, as well as a computer vision model. The results showed significant energy savings, highlighting Perseus as a promising solution for reducing the energy demands of AI.

Benefits for Equitable AI Access

Reducing the power requirements of AI could also make it more accessible globally. Lower energy costs mean more countries can afford to run large AI models without depending on remote services or compromising on model size and accuracy. “This could help level the playing field between communities with different access to computing power,” Chowdhury added.

Moving Forward

Perseus is now available as an open-source tool, integrated into Zeus, another tool for optimizing and measuring AI’s energy use. The project was funded by the National Science Foundation and other sponsors, with support from Chameleon Cloud and CloudLab for computational resources.

As AI becomes an increasingly integral part of our lives, smarter energy use will be essential to keeping it sustainable. Perseus offers a promising step forward, not just for energy savings but also for making AI more accessible and equitable worldwide.

Original Story: Up to 30% of the power used to train AI is wasted: Here’s how to fix it | University of Michigan News (umich.edu)