Meta-Semi—Semi-Supervised Learning Algorithm

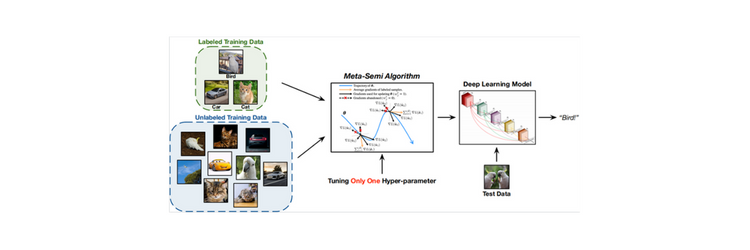

Until now, deep semi-supervised learning algorithms have been impractical in real semi-supervised learning scenarios, including medical image processing, hyper-spectral image classification, network traffic recognition, and document recognition. Recently, a research team proposed a novel meta-learning-based semi-supervised learning algorithm dubbed Meta-Semi. The algorithm requires tuning only one additional hyper-parameter and outperforms state-of-the-art semi-supervised learning algorithms. The team’s work is published in the journal CAAI Artificial Intelligence Research.

Deep learning has shown success in supervised tasks, and that success is fueled by abundant annotated training data. Yet data labeling, in which raw data is identified and labeled, is time-consuming and expensive. Meta-Semi effectively trains deep models with only a small number of labeled samples.

The researchers exploit the labeled data while requiring only one additional hyper-parameter to achieve impressive performance under a variety of conditions. A hyper-parameter is a parameter whose value is used to control the learning process.

The team developed their algorithm on the assumption that the network could be trained effectively with correctly pseudo-labeled unannotated samples. They generated soft pseudo labels for the unlabeled data online during training, based on the network's predictions. They then filtered out samples whose pseudo labels were incorrect or unreliable and trained the model on the remaining data, whose pseudo labels were relatively reliable. The result was a meta-learning formulation in which the correctly pseudo-labeled data had a similar distribution to the labeled data.
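The pseudo-labeling and filtering steps described above can be illustrated with a minimal Python sketch. It is not the team's method: Meta-Semi selects samples through its meta-learning formulation, whereas this sketch swaps in a plain confidence threshold to make the idea concrete. The function names `softmax` and `select_pseudo_labeled`, the `threshold` value, and the example logits are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Turn raw network outputs into a probability vector (a soft pseudo label)."""
    largest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - largest) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def select_pseudo_labeled(batch_logits, threshold=0.9):
    """Keep unlabeled samples whose soft pseudo label looks reliable.

    Assumption: "reliable" means the top class probability reaches
    `threshold`. This is a stand-in for Meta-Semi's meta-learning
    criterion, not a reproduction of it.
    """
    kept = []
    for index, logits in enumerate(batch_logits):
        soft_label = softmax(logits)
        if max(soft_label) >= threshold:
            kept.append((index, soft_label))
    return kept


# Hypothetical network outputs for three unlabeled samples and three classes.
batch_logits = [
    [4.0, 0.0, 0.0],  # confident in class 0
    [0.5, 0.4, 0.3],  # nearly uniform, so unreliable
    [0.0, 0.0, 5.0],  # confident in class 2
]

kept = select_pseudo_labeled(batch_logits, threshold=0.9)
kept_indices = [index for index, _ in kept]
```

With these example values, the first and third samples pass the filter and the second is discarded. In a real training loop, the kept soft labels would serve as targets for the unlabeled samples in the next update step.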

Meta-Semi significantly outperforms state-of-the-art semi-supervised learning algorithms on the challenging semi-supervised CIFAR-100 and STL-10 tasks, and achieves competitive performance on the CIFAR-10 and SVHN datasets. These datasets are collections of images frequently used in training machine learning algorithms.