MIT Optimizes Robot Shapes Based on Terrain

MIT just developed a system that optimizes a robot’s shape for the terrain it is meant to cross. The system simulates different shapes to establish which design is best for what the robot must do and where it will do it.

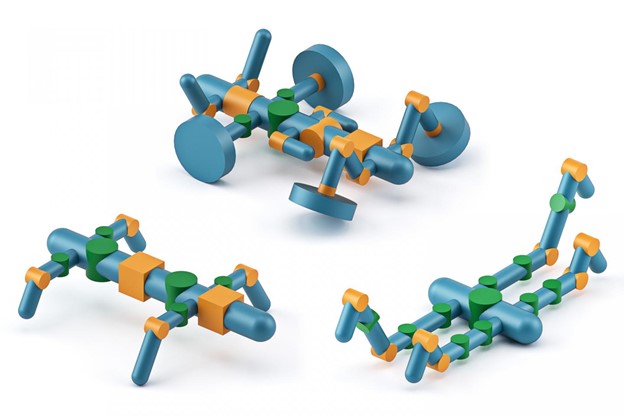

Dubbed “RoboGrammar,” the system takes the available robot parts and the terrain to be navigated as inputs. It then generates both an optimized structure and a control program, eliminating much of the manual drudgery that robot design currently requires. It also does away with assumptions that may be limiting designs.

The team, led by Allan Zhao, the paper’s lead author and a PhD student in the MIT Computer Science and Artificial Intelligence Laboratory, proposed that greater design innovation could improve functionality. So they built a computer model for the task, a system that wasn’t unduly influenced by prior convention. They developed a “graph grammar” based on animals, specifically arthropods, featuring a central body plus a variety of segments that may be legs or even wheels.
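To make the idea of a grammar for robot bodies more concrete, here is a minimal Python sketch. It is not RoboGrammar's actual grammar: the rule set `RULES`, the symbols `BODY`, `LIMBS`, and `LIMB`, and the `budget` parameter are all hypothetical, chosen only to show how rewriting rules can produce a body made of segments that each carry a pair of legs or wheels. It also flattens each design into a list of parts rather than building a true graph.

```python
import random

# Hypothetical toy rules (not the RoboGrammar rule set). Upper-case symbols are
# rewritten further; lower-case symbols are finished robot parts.
RULES = {
    "BODY": [["segment", "LIMBS"], ["segment", "LIMBS", "BODY"]],
    "LIMBS": [["LIMB", "LIMB"]],
    "LIMB": [["leg"], ["wheel"]],
}


def expand(symbol, rng, budget):
    """Rewrite `symbol` recursively until only finished parts remain.

    `budget` caps how many body segments a single design may contain.
    """
    if symbol not in RULES:
        return [symbol]  # already a finished part
    options = RULES[symbol]
    if symbol == "BODY" and budget <= 1:
        options = options[:1]  # budget used up: stop adding segments
    parts = []
    for child in rng.choice(options):
        child_budget = budget - 1 if child == "BODY" else budget
        parts.extend(expand(child, rng, child_budget))
    return parts


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(expand("BODY", rng, budget=4))
```

Each call yields one candidate structure as a flat list of parts. A real system would keep the connections between parts and attach joint and geometry data, which this sketch leaves out.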

RoboGrammar defines the problem, draws up possible robotic solutions, and selects the optimal ones, while humans supply the set of available robotic components and the terrain to be traversed. The graph grammar generates hundreds of thousands of potential robot structures. The controller, which represents the instructions governing the movement sequence, is designed for each robot with an algorithm called Model Predictive Control, prioritizing rapid forward movement. A neural network algorithm iteratively samples and evaluates sets of robots, and learns which designs work better for a given task.
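The sample, evaluate, and learn loop can be illustrated with a deliberately simplified Python sketch. Several things here are assumptions and not the team's method: a running average per design stands in for the neural network, the made-up `simulate` function stands in for the physics simulation and the Model Predictive Control step, and the design space is just a segment count paired with a limb type.

```python
import random

# Hypothetical design space: (number of segments, limb type).
DESIGNS = [(n, limb) for n in range(1, 5) for limb in ("leg", "wheel")]


def simulate(design, terrain, rng):
    """Made-up stand-in for simulation plus control: a noisy forward-progress score."""
    segments, limb = design
    # Assumption for illustration only: wheels suit flat ground, legs suit rough ground.
    suited = (limb == "wheel") == (terrain == "flat")
    return (1.0 if suited else 0.3) * segments + rng.gauss(0, 0.1)


def search(terrain, rounds=200, epsilon=0.2, seed=0):
    """Sample designs, score them, and keep a learned estimate of each design's value."""
    rng = random.Random(seed)
    totals = {d: 0.0 for d in DESIGNS}
    counts = {d: 0 for d in DESIGNS}

    def estimate(design):
        # Untested designs get +inf so that each one is tried at least once.
        if counts[design] == 0:
            return float("inf")
        return totals[design] / counts[design]

    for _ in range(rounds):
        if rng.random() < epsilon:
            design = rng.choice(DESIGNS)         # explore a random design
        else:
            design = max(DESIGNS, key=estimate)  # exploit the current best estimate
        totals[design] += simulate(design, terrain, rng)
        counts[design] += 1

    tested = [d for d in DESIGNS if counts[d] > 0]
    return max(tested, key=estimate)


if __name__ == "__main__":
    print("flat :", search("flat"))
    print("rough:", search("rough"))
```

With this toy scoring rule the search should settle on long wheeled designs for flat terrain and long legged designs for rough terrain. A table of averages only works because there are eight designs; with hundreds of thousands of candidates, a learned model that generalizes across similar structures is what makes the search tractable.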

Zhao calls RoboGrammar a “tool for robot designers to expand the space of robot structures they draw upon.” The team plans to build and test optimal robots in the real world.