Next gen SoC addresses complexities of system architectures

NetSpeed Systems has released Gemini 3.0, a cache-coherent network-on-chip IP that maximizes the performance of heterogeneous multicore system-on-chip (SoC) designs for cloud computing, automotive, mobile and IoT applications. Many of today’s applications build in human-like comprehension and decision-making capabilities.

Applications such as Advanced Driver Assistance Systems (ADAS) offer users an augmented experience of the physical, real-world environment and include computer-generated sensory input such as sound, video, graphics or GPS data. The technologies underlying these applications, such as computer vision, facial recognition, voice recognition and other machine-learning-based capabilities, require far more processing performance and much better power efficiency than is attainable with traditional multicore processor platforms.

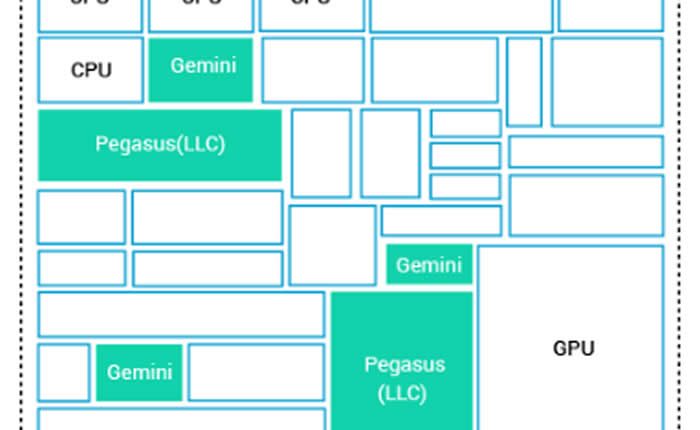

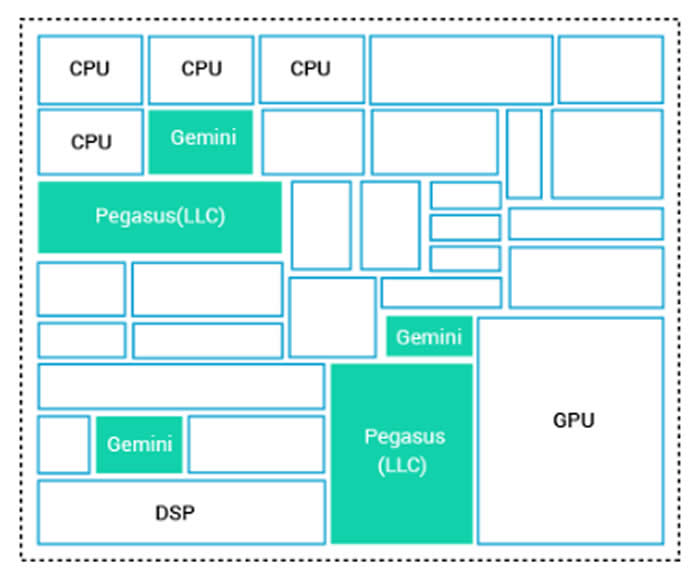

“Processors are becoming increasingly specialized to meet the needs of their target applications,” said Linley Gwennap, Principal Analyst at the Linley Group. “To satisfy complex application requirements, many SoCs now include a mix of CPU cores, computing clusters, GPUs and other computing resources and specialized accelerators. To maximize the performance of these heterogeneous designs, SoCs need a robust on-chip network such as Gemini that optimizes the communication among the various components and arbitrates the sharing of memory and other critical resources.”

“Gemini 3.0 is a next-generation system-on-chip (SoC) interconnect platform that specifically addresses the complexities and the opportunities of heterogeneous system architectures,” said Anush Mohandass, Vice President of marketing and business development at NetSpeed. “Gemini enables SoC architects to implement designs that can achieve more than 10x greater performance in a reasonable power envelope. This is something that is not feasible with traditional multicore designs.”

“When designing a SoC, the conventional approach is to architect the system with its IP blocks and interconnect and simulate it later in the development process when most of the design decisions have been made,” said Fred Weber, former AMD CTO and industry veteran who is a member of NetSpeed’s board. “This is essentially a trial-and-error approach that is expensive, time-consuming and risky. Gemini, on the other hand, enables system architects to perform modelling and simulation much earlier in development, before integration begins. NetSpeed’s machine learning capabilities rapidly explore a multitude of models and architecture options to give system architects accurate system-level performance predictions right from the beginning.”

Gemini is the only SoC interconnect solution that uses machine learning to accurately model the system as a whole to achieve the best application performance. In contrast, conventional approaches tend to optimize individual subsystems in isolation, which can result in bottlenecks and systems that are overdesigned to handle worst-case conditions. Gemini uses advanced networking algorithms to rapidly create a cache-coherent SoC interconnect that is deadlock-free and delivers quality of service (QoS) for all use cases. It offers OEMs an easier and more cost-effective way to assemble robust heterogeneous SoCs that provide the performance necessary for rich and complex applications.

Gemini 3.0 offers unprecedented configurability, allowing users to customize every component of the interconnect, from IP interface to routers to topology and interface links.

- Gemini 3.0 supports both the ARM AMBA 5 CHI (Coherent Hub Interface) and ARM AMBA 4 AXI Coherency Extensions (ACE) on-chip interconnect standards in a single design and includes support for broadcast and multicast, which can dramatically improve performance.

- It supports up to 64 fully cache-coherent CPU clusters, GPU blocks and other coherent compute blocks and up to 200 I/O-coherent and non-coherent agents.

- It can handle cache-coherent, I/O-coherent, and non-coherent traffic in a single SoC interconnect design platform.

- It offers unique system-level optimizations including integrated DMA, on-chip RAM and Last Level Cache (LLC) IPs with runtime configurability.
