Since robots are expected to perform a multitude of tasks that involve fitting into tight spaces and picking up fragile objects, they need to come equipped with some knowledge of their hand’s precise location.

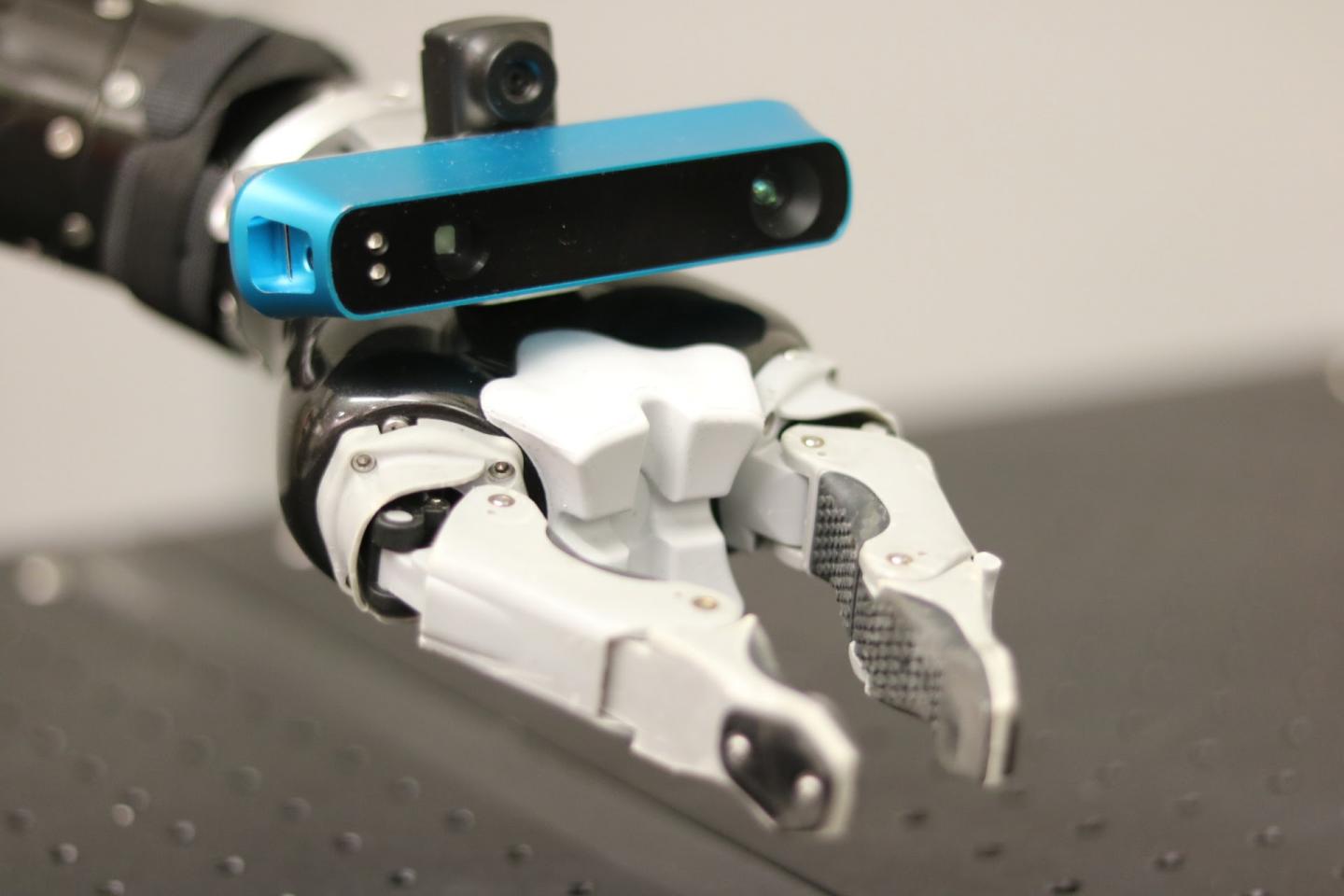

This is why researchers at Carnegie Mellon University’s Robotics Institute have attached a camera to a robot’s hand. By doing so, the robot’s hand “eye” can quickly create a 3D model of its environment and also locate the hand within that 3D world.

To make this method even more efficient, the CMU team improved the accuracy of the map by incorporating the arm itself as a sensor and using the angle of its joints to better determine the pose of the camera.
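As a rough illustration of how joint angles can pin down where a hand-mounted camera is, here is a minimal forward-kinematics sketch for a simplified flat (planar) arm. This is not the CMU team's code: the function name `camera_pose_planar`, the two-joint arm, and the link lengths are all assumptions made for the example, and a real manipulator would need full 3D transforms.

```python
import math

def camera_pose_planar(joint_angles, link_lengths):
    """Hypothetical helper: pose (x, y, heading) of a camera at the tip of a planar arm.

    joint_angles: rotation of each joint relative to the previous link, in radians.
    link_lengths: length of each link, in the same order as the joints.
    """
    x = y = heading = 0.0
    for angle, length in zip(joint_angles, link_lengths):
        heading += angle                  # each joint rotates everything after it
        x += length * math.cos(heading)   # step along the rotated link
        y += length * math.sin(heading)
    return x, y, heading

# Assumed example arm: two 1-unit links, first joint at +90 degrees, second at -90.
x, y, heading = camera_pose_planar([math.pi / 2, -math.pi / 2], [1.0, 1.0])
print(round(x, 6), round(y, 6), round(heading, 6))
```

In a setup like the one described, a pose computed this way from the joint encoders would serve as an estimate of where the camera is, which the mapping step could then refine against what the camera actually sees.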

According to Siddhartha Srinivasa, associate professor of robotics at CMU, placing a camera or sensor within the hand of a robot has become a feasible task now that sensors are smaller and more power-efficient.

This new vision method can be seen as an important step toward allowing robots to perform more human-like tasks. Robots typically have heads equipped with a camera on top, which does not permit them to bend over like a person and get a better view of a work space.

And while the idea of placing a camera in the hand of a robot is great, it won’t do much good if the robot can’t see its hand and doesn’t know where its hand is relative to objects in its environment.

It’s a problem shared with mobile robots that must operate in an unknown environment. One solution for mobile robots in this predicament is simultaneous localization and mapping, also called SLAM, in which the robot pieces together input from sensors such as cameras, laser radars and wheel odometry to create a 3D map of the new environment and to figure out where the robot is within that 3D world.

“There are several algorithms available to build these detailed worlds, but they require accurate sensors and a ridiculous amount of computation,” said Srinivasa.

By automatically tracking the joint angles, the system produces a high-quality map, even if the camera is moving quickly or if some of the sensor data is missing.

The researchers demonstrated their Articulated Robot Motion for SLAM (ARM-SLAM) with a small depth camera attached to a lightweight manipulator arm. When they used this method to build a 3D model of a bookshelf, they found that it produced reconstructions that were equivalent to, or in some cases better than, those of other mapping techniques.


“We still have much to do to improve this approach, but we believe it has huge potential for robot manipulation,” said Srinivasa.

“Automatically tracking the joint angles enables the system to produce a high-quality map even if the camera is moving very fast or if some of the sensor data is missing or misleading,” Klingensmith said.
