Researchers make infrared tech less expensive

Australian researchers have come up with a way to make infrared technology, which is used in night-vision and fog-vision devices, much less expensive.

Their breakthrough could potentially save millions of dollars for the defense industry. Infrared devices can cost up to $100,000, and some even require cooling down to -200°C.

But now the team, led by researchers at the University of Sydney, has demonstrated a dramatic increase in the light absorption efficiency of a semiconductor layer that is only a few hundred atoms thick, raising light absorption from the current 7.7% to almost 99%.

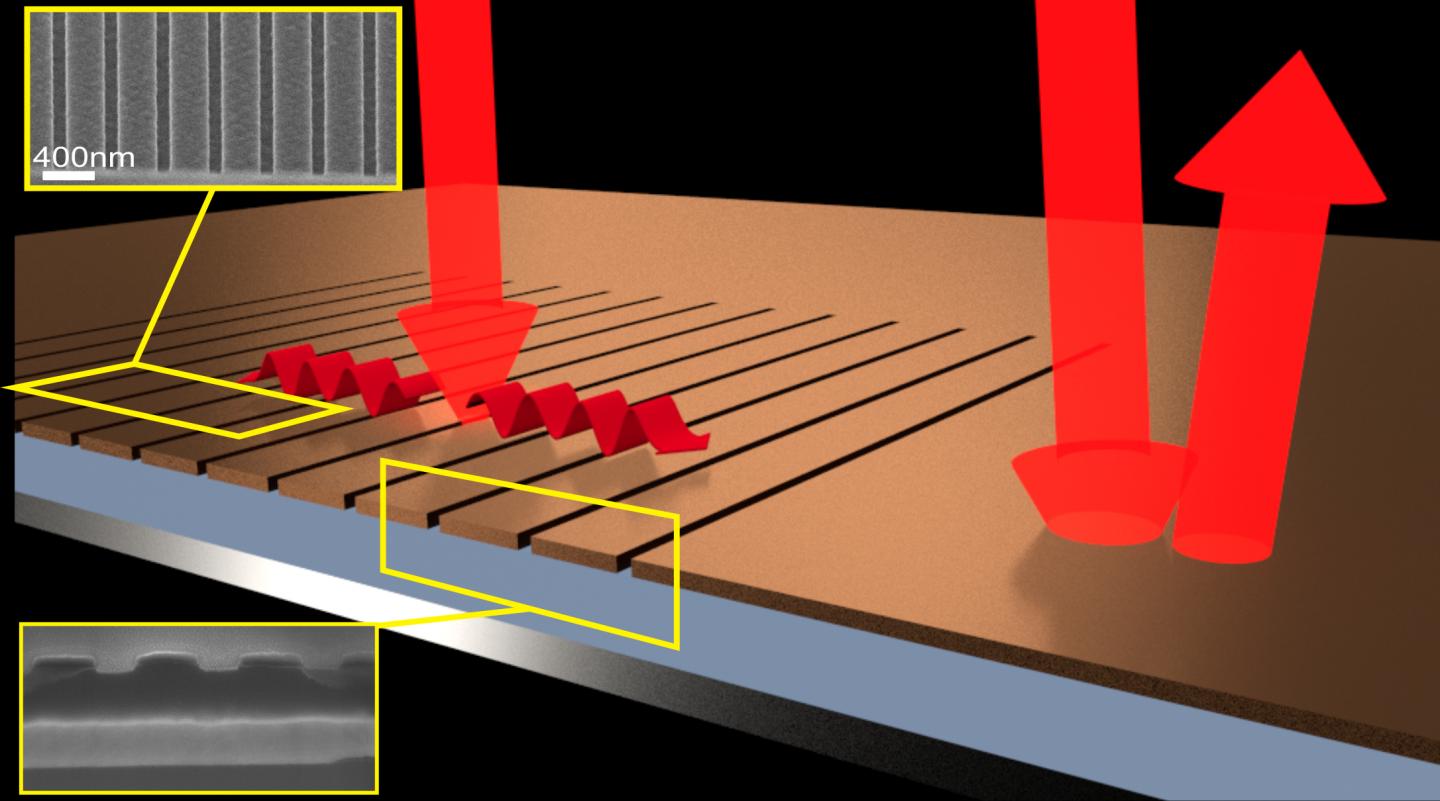

According to Professor Martijn de Sterke of the University of Sydney’s School of Physics, the team discovered that perfect thin-film light absorbers could be created simply by etching grooves into them.

“Conventional absorbers add bulk and cost to the infrared detector as well as the need for continuous power to keep the temperature down. The ultrathin absorbers can reduce these drawbacks,” said de Sterke.

When thin grooves are etched into the film, the light is directed sideways and almost all of it is absorbed, even though the absorbing layer is less than 1/2000th the thickness of a human hair.

Infrared technology isn’t the only area that could benefit from perfectly absorbing ultra-thin films, though. Additional potential applications include defense technology, autonomous robots, medical tools, and consumer electronics.

According to Professor Lindsay Botten, Director of Australia’s National Computational Infrastructure (NCI), the structures are much simpler to design and fabricate than existing thin-film light absorbers, which require either complex nanostructures, metamaterials, and exotic materials, or difficult combinations of metals and non-metals.

“There are major efficiency and sensitivity gains to be obtained from making photo-detectors with less material,” said Botten.

Story via EurekAlert.
