Robots—Observation Leads to Empathy

Engineers at Columbia University’s School of Engineering and Applied Science have created a robot that can visually predict how a partner robot will behave, and the result is a smidgeon of empathy.

Columbia’s “Robot Theory of Mind” concept predicts that robots will get along with other robots—and humans—more intuitively. And researchers anticipate that this empathy will grow.

Until now, robots have remained incapable of social communication. Columbia’s robot, however, has learned to predict a partner robot’s future actions and goals by using a few video frames. The goal of the research, led by Mechanical Engineering Professor Hod Lipson, is to give robots the ability to understand and anticipate the goals of other robots, purely from visual observations.

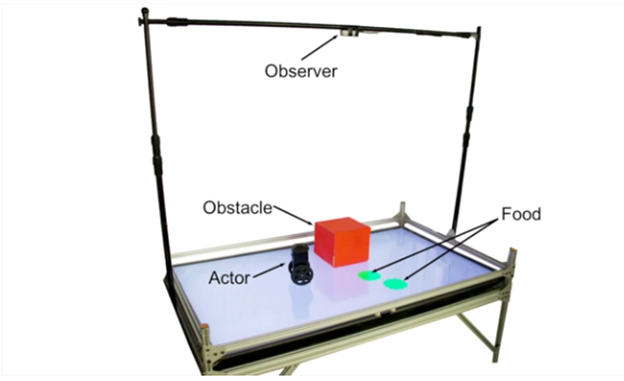

In this case, researchers built a robot, placed it in a playpen of roughly 3×2 feet, and programmed it to seek and move towards any green circle it could see. Sometimes the robot could see a green circle through its camera and would move towards it. At other times, the green circle was hidden behind a tall red cardboard box, and the robot would either move towards a different green circle that it could see or remain still.
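The acting robot's rule described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the researchers' code: the 2D top-down geometry, the axis-aligned `Box`, the step size, and the names `segment_hits_box` and `actor_step` are all hypothetical. It models only the partner robot's behavior. The observing robot was never given such a rule and had to infer it from video.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Box:
    """Axis-aligned obstacle; a hypothetical stand-in for the red cardboard box."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def segment_hits_box(p, q, box):
    """Return True if the straight line of sight from p to q crosses the box.

    Uses Liang-Barsky style clipping of the segment against each axis slab.
    """
    t0, t1 = 0.0, 1.0
    for start, delta, lo, hi in (
        (p[0], q[0] - p[0], box.xmin, box.xmax),
        (p[1], q[1] - p[1], box.ymin, box.ymax),
    ):
        if delta == 0:
            # Segment is parallel to this slab: it must start inside it.
            if start < lo or start > hi:
                return False
        else:
            ta, tb = (lo - start) / delta, (hi - start) / delta
            if ta > tb:
                ta, tb = tb, ta
            t0, t1 = max(t0, ta), min(t1, tb)
            if t0 > t1:
                return False
    return True


def actor_step(actor, circle, box, step=0.1):
    """Move one step towards the circle if it is visible; otherwise stay put."""
    if segment_hits_box(actor, circle, box):
        return actor  # circle is hidden behind the box
    dx, dy = circle[0] - actor[0], circle[1] - actor[1]
    dist = math.hypot(dx, dy)
    if dist <= step:
        return circle  # close enough to arrive this step
    ux, uy = dx / dist, dy / dist
    return (actor[0] + step * ux, actor[1] + step * uy)
```

For example, with the actor at `(0.5, 1.0)` and a box spanning `x` from 1.2 to 1.6, a circle at `(2.5, 1.0)` is hidden and the actor stays in place, while a circle at `(0.5, 1.8)` is visible and the actor moves towards it.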

A second robot, watching the first, began to anticipate its partner’s goal and path. Eventually it was able to predict both correctly 98 out of 100 times across varying situations, without being told explicitly about the partner’s visibility handicap.

The findings, published in Nature Scientific Reports, demonstrate that a robot can observe the world from another robot’s perspective. The observing robot can understand, without being guided, whether its partner could or could not see the green circle from its vantage point. The researchers conclude that this is a primitive form of empathy. The level of accuracy was unexpected. The research team believes that this is the start of robots having what cognitive scientists call “Theory of Mind” (ToM).

At approximately age three, human children begin to understand that others may have goals, needs, and perspectives different from their own. This can lead to playful activities such as hide and seek, and also to sophisticated manipulations like lying. Theory of Mind is recognized as a key distinguishing hallmark of human and primate cognition, essential for complex and adaptive social interactions such as cooperation, competition, empathy, and deception.

The technology will make robots more resilient and useful, but when robots can anticipate how humans think, they may also learn to manipulate those thoughts. So, will empathy lead to manipulation, or will a way be found to keep robots in check?