What Does it Mean When a Robot Smiles?

According to Columbia Engineering researchers who are using AI to teach robots appropriate, reactive human facial expressions, it may mean the robot is on its way to earning your trust. The study, titled “Smile Like You Mean It: Driving Animatronic Robotic Face with Learned Models,” was supported by National Science Foundation NRI and DARPA MTO grants. In it, the researchers make the case that robots used in nursing homes, warehouses, and factories need to be more facially realistic.

Researchers in the Creative Machines Lab at Columbia Engineering spent five years creating EVA, an autonomous robot with a soft, expressive face that responds by matching the expressions of nearby humans. Because people tend to humanize the robots that help them or work alongside them, the researchers decided to give EVA an expressive and responsive human face. Adding those features, however, was not easy.

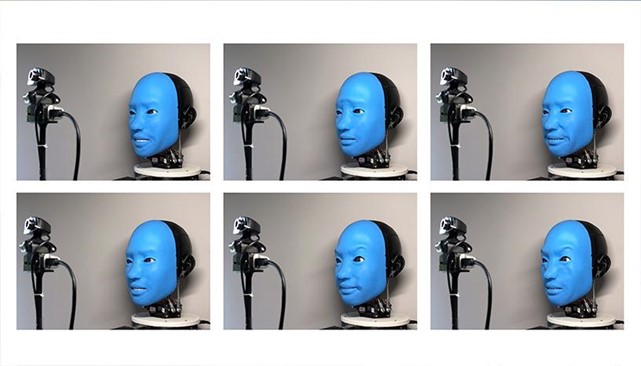

In the first phase, undergraduate student Zanwar Faraj led a team of students in building the robot’s physical “machinery.” They built EVA as a disembodied bust that strongly resembles the silent but facially animated performers of the Blue Man Group. EVA can express the six basic emotions (anger, disgust, fear, joy, sadness, and surprise) as well as more nuanced ones. It does so using artificial “muscles” (i.e., cables and motors) that pull on specific points on its face, mimicking the movement of 42 tiny muscles attached at various points to the skin and bones of human faces.

To overcome size constraints, the team relied heavily on 3D printing to manufacture parts with complex shapes that integrated seamlessly and efficiently with EVA’s skull.

After the team mastered EVA’s “mechanics,” they turned to programming the artificial intelligence that would guide its facial movements. Here, the team led by Hod Lipson, director of the Creative Machines Lab, made two technological advances. First, EVA uses deep learning to “read” and then mirror the expressions on nearby human faces. Second, EVA learns to mimic those expressions by trial and error, from watching videos of itself. To teach EVA what its own face looked like, the team filmed hours of footage of EVA making a series of random faces. Then, like a human watching herself on Zoom, EVA’s internal neural networks learned to pair muscle motion with the video footage of its own face. EVA then acquired the ability to read human facial gestures from a camera and to respond by mirroring that person’s expression.
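The self-modeling idea described above (make random faces, watch the result, then learn which motor commands produce which expression) can be illustrated with a toy sketch. This is not EVA’s actual system: as a loud simplifying assumption, a linear least-squares inverse model stands in for EVA’s deep neural networks, the “face” is a hypothetical hidden linear map from motor commands to landmark coordinates, and `N_MOTORS`, `N_LANDMARKS`, `observe_face`, and `mirror` are illustrative names invented for this example. The “human” expression is also simulated as one the face can physically produce.

```python
import numpy as np

rng = np.random.default_rng(0)

N_MOTORS = 6      # hypothetical number of cable-driving motors (assumption)
N_LANDMARKS = 10  # hypothetical number of tracked landmark coordinates (assumption)

# Hidden "physics" of the toy face: how motor commands move the landmarks.
# The learner never reads this matrix directly, only noisy camera observations.
TRUE_FACE = rng.normal(size=(N_MOTORS, N_LANDMARKS))


def observe_face(commands: np.ndarray) -> np.ndarray:
    """Simulated camera: landmark positions produced by motor commands, plus noise."""
    noise = rng.normal(scale=0.01, size=(commands.shape[0], N_LANDMARKS))
    return commands @ TRUE_FACE + noise


# 1. Motor babbling: make many random faces and record what the camera sees.
babble_commands = rng.uniform(-1.0, 1.0, size=(500, N_MOTORS))
babble_landmarks = observe_face(babble_commands)

# 2. Learn an inverse model (landmarks -> commands) from the robot's own "footage".
#    A linear least-squares fit stands in for a neural network here.
inverse_model, *_ = np.linalg.lstsq(babble_landmarks, babble_commands, rcond=None)


def mirror(target_landmarks: np.ndarray) -> np.ndarray:
    """Predict the motor commands that should reproduce an observed expression."""
    return target_landmarks @ inverse_model


# 3. Mirror a simulated "human" expression given as landmark positions.
human_commands = rng.uniform(-1.0, 1.0, size=(1, N_MOTORS))
human_landmarks = human_commands @ TRUE_FACE
predicted = mirror(human_landmarks)
error = np.abs(predicted - human_commands).max()
print(f"max command error: {error:.4f}")
```

The key design point the sketch tries to capture is that the robot never needs a hand-written model of its own face: the mapping from expression to motor commands is fit entirely from data it generated about itself. A real system would replace the linear fit with a network that maps camera images, rather than clean landmark vectors, to commands.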

The researchers envision that robots able to respond to a wide variety of human body language could one day be useful in workplaces, hospitals, schools, and homes.

Original Release: Columbia